Light-Speed Logic: Photonic Quantum Computing Explained

Light itself can be used to perform quantum computations, carrying information at unparalleled speeds while resisting many sources of noise that affect other qubit types. In photonic quantum computing, researchers use photons to execute quantum algorithms, transmit quantum states over long distances, and explore architectures that can operate at or near room temperature.

The approach is based on decades of optical communication technology, repurposing components like waveguides and beam splitters for quantum operations. With ongoing progress in photon sources, detectors, and integrated photonics, this field is moving from experimental setups toward scalable systems.

What Is Photonic Quantum Computing?

Photonic quantum computing is a quantum computing approach that uses photons, particles of light, as qubits to encode and process information. Photonic qubits can be represented in several ways, including polarization (horizontal/vertical states), path (which optical path a photon takes), and time-bin (the arrival time of a photon within a pulse sequence). These encoding methods allow quantum information to be manipulated and transmitted over long distances with low loss and minimal decoherence.
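To make polarization encoding concrete, here is a minimal sketch that treats a polarization qubit as a two-component Jones vector, with |H⟩ = (1, 0) and |V⟩ = (0, 1). It assumes an ideal half-wave plate with its fast axis at angle θ (Jones matrix [[cos 2θ, sin 2θ], [sin 2θ, −cos 2θ]], up to a global phase) followed by an ideal polarizing beam splitter that separates H from V. The function names are illustrative only.

```python
import math

# A polarization qubit as a Jones vector (amplitude_H, amplitude_V).
H = (1 + 0j, 0 + 0j)
V = (0 + 0j, 1 + 0j)

def half_wave_plate(state, theta):
    """Apply an ideal half-wave plate with fast axis at angle theta (radians).

    Jones matrix (up to a global phase): [[cos 2t, sin 2t], [sin 2t, -cos 2t]].
    """
    c, s = math.cos(2 * theta), math.sin(2 * theta)
    a_h, a_v = state
    return (c * a_h + s * a_v, s * a_h - c * a_v)

def pbs_probabilities(state):
    """Ideal polarizing beam splitter: probability of the photon leaving the H or V port."""
    a_h, a_v = state
    return abs(a_h) ** 2, abs(a_v) ** 2

# A plate at 22.5 degrees turns |H> into the diagonal state (|H> + |V>)/sqrt(2).
diag = half_wave_plate(H, math.radians(22.5))
p_h, p_v = pbs_probabilities(diag)
print(f"P(H) = {p_h:.3f}, P(V) = {p_v:.3f}")
```

Rotating the plate to 45° instead maps |H⟩ fully onto |V⟩, which is how a simple optical element acts as a single-qubit gate on this encoding.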

A photonic quantum computer relies on several core components.

- Photon sources generate the qubits, often through deterministic single-photon emitters or probabilistic methods such as spontaneous parametric down-conversion.

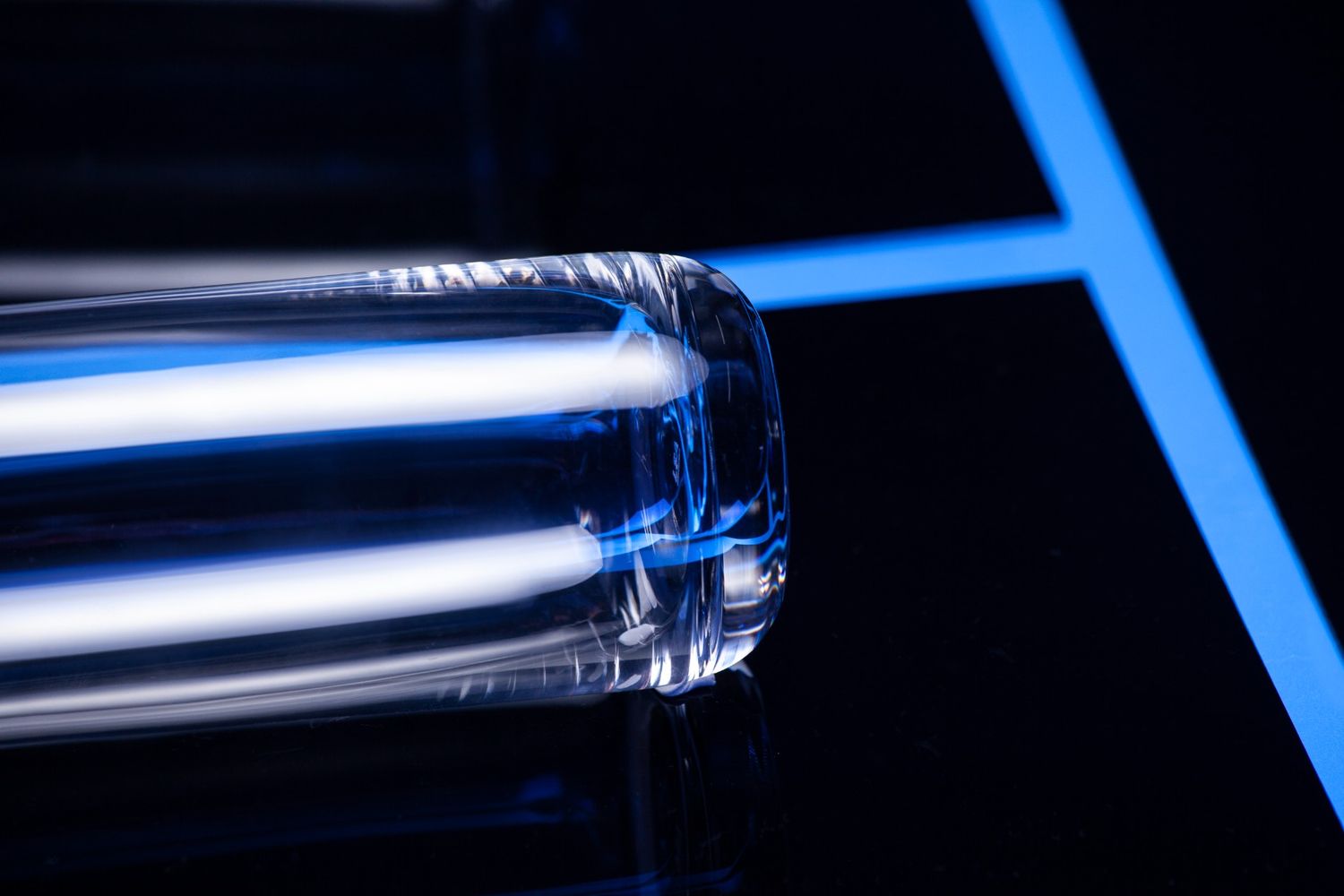

- Waveguides and beam splitters direct and combine photons, allowing for controlled interference patterns that are essential for computation.

- Detectors measure photon states, with single-photon detectors providing the necessary sensitivity for quantum readout.

Quantum logic gates in photonic systems can be implemented with linear optics, as in the Knill–Laflamme–Milburn (KLM) scheme, which uses interference, additional (ancilla) photons, and measurement to induce effective nonlinearities, or through direct nonlinear optical interactions.
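The interference that beam splitters provide can be illustrated with a single photon in a Mach–Zehnder interferometer: two 50:50 beam splitters with a phase shifter φ in one arm, acting on a path-encoded qubit. The sketch assumes ideal, lossless components and uses the symmetric beam-splitter convention (1/√2)[[1, i], [i, 1]]. With this convention a photon entering port 0 exits port 0 with probability sin²(φ/2) and port 1 with probability cos²(φ/2); other sign conventions relabel the outputs.

```python
import cmath
import math

# Path-encoded qubit: (amplitude in mode 0, amplitude in mode 1).
SQRT_HALF = 1 / math.sqrt(2)

def beam_splitter(state):
    """Ideal 50:50 beam splitter, symmetric convention (1/sqrt2) [[1, i], [i, 1]]."""
    a0, a1 = state
    return (SQRT_HALF * (a0 + 1j * a1), SQRT_HALF * (1j * a0 + a1))

def phase_shift(state, phi):
    """Phase shifter adding phase phi to mode 0 only."""
    a0, a1 = state
    return (cmath.exp(1j * phi) * a0, a1)

def mach_zehnder(phi, state=(1 + 0j, 0j)):
    """Send a single photon through BS -> phase -> BS and return output-port probabilities."""
    out = beam_splitter(phase_shift(beam_splitter(state), phi))
    return tuple(abs(a) ** 2 for a in out)

for phi in (0.0, math.pi / 2, math.pi):
    p0, p1 = mach_zehnder(phi)
    print(f"phi = {phi:.2f}: P(port 0) = {p0:.3f}, P(port 1) = {p1:.3f}")
```

Tuning φ steers the photon between the two outputs, which is exactly the controllable interference that larger photonic circuits build on.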

Photonic Computing vs. Quantum Computing

Photonic computing and quantum computing are related yet different concepts. Photonic computing refers to using photons for information processing, which can be applied in both classical and quantum contexts. In classical photonic computing, light is used in place of electronics to carry out operations, with the aim of greater speed or energy efficiency.

Quantum computing, on the other hand, exploits quantum mechanical effects, such as superposition and entanglement, to tackle certain problems that are intractable for classical computers. Photonic quantum computing is essentially a subset of quantum computing where photons serve as qubits. So, while all photonic quantum computing falls under quantum computing, not all photonic computing is quantum in nature.

Advantages of Photonic-Based Quantum Computing

Photonic quantum computing has several advantages over other quantum architectures. These advantages have to do with the unique properties of photons and the compatibility of optical technologies with high-speed quantum systems.

Potential for Scalability

Photonic quantum computing may make it possible to scale up quantum systems without some of the heavy hardware overhead of other architectures. Photons are massless and interact only weakly with their surroundings, so they can travel long distances with little decoherence, making them well suited to building large, distributed quantum networks. This paves the way for modular systems in which additional quantum processors are linked via optical fiber, boosting computational capacity without requiring the processors to sit side by side.

Integrated photonic chips, built with fabrication processes similar to those used for modern telecommunications components, allow thousands or even millions of optical components to be patterned onto a single wafer. Unlike platforms that depend on large cryogenic systems, many photonic components can operate at or near room temperature, removing one of the biggest barriers to mass deployment. This scalability makes photonics a strong candidate for both industrial and commercial quantum computing use cases.

Minimal Cooling Requirements

One of the main challenges in quantum computing is maintaining qubit stability, which often requires cooling systems to near absolute zero. Photonic quantum computers, however, do not rely on superconducting circuits that must be held at millikelvin temperatures in dilution refrigerators. Because optical photons carry far more energy than the thermal fluctuations present at room temperature, they are largely immune to thermal noise, so much of a photonic processor can run at room temperature. The main exception is the highest-performance single-photon detectors, which still need cryogenic cooling, though typically at a few kelvin rather than millikelvin. Avoiding dilution refrigerators lowers both cost and energy consumption, making photonic quantum systems easier to deploy in a range of settings, from dedicated research labs to, possibly, cloud data centers.

Minimal cooling requirements also mean reduced maintenance overhead, which allows for more focus on scaling and algorithm development rather than thermal stability. This makes photonic quantum computing more accessible to startups, research institutions, and industries without specialized infrastructure.
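The claim that photons resist thermal noise can be checked with the Bose–Einstein mean occupation n̄ = 1/(e^(hf/k_BT) − 1), the average number of thermal photons in a mode of frequency f at temperature T. The sketch compares a telecom-band optical photon (1550 nm, an assumed example wavelength) with a 5 GHz microwave mode, an illustrative frequency in the range typical of superconducting qubits.

```python
import math

H_PLANCK = 6.62607015e-34   # Planck constant, J*s
K_BOLTZMANN = 1.380649e-23  # Boltzmann constant, J/K
C_LIGHT = 299_792_458.0     # speed of light in vacuum, m/s

def thermal_occupation(frequency_hz, temperature_k):
    """Mean number of thermal photons in one mode (Bose-Einstein distribution)."""
    x = H_PLANCK * frequency_hz / (K_BOLTZMANN * temperature_k)
    return 1.0 / math.expm1(x)  # expm1 keeps precision when x is small

optical_hz = C_LIGHT / 1550e-9   # telecom-band photon, about 193 THz
microwave_hz = 5e9               # assumed superconducting-qubit frequency

print(f"optical, 300 K:   {thermal_occupation(optical_hz, 300):.1e}")
print(f"microwave, 300 K: {thermal_occupation(microwave_hz, 300):.0f}")
print(f"microwave, 10 mK: {thermal_occupation(microwave_hz, 0.010):.1e}")
```

At room temperature the optical mode holds essentially no thermal photons (on the order of 10⁻¹⁴), while the 5 GHz mode holds more than a thousand; only at millikelvin temperatures does the microwave mode become comparably quiet.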

Compatibility With Current Telecom Infrastructure

Photonic quantum computing goes hand in hand with existing telecommunications technology. The same fiber-optic cables that carry internet data can also transmit photonic qubits, which could allow quantum processors to be linked across a city, and eventually over much longer distances, without entirely new infrastructure. This compatibility reduces the cost and time required for large-scale deployment. Telecom-grade optical components such as wavelength multiplexers and splitters are already widely available and manufactured at scale, so photonic quantum devices can benefit from decades of advances in the telecom sector. One notable exception is the optical amplifier: the no-cloning theorem forbids copying an unknown quantum state, so conventional amplifiers cannot boost photonic qubits, and long-distance links will instead require quantum repeaters.

Moreover, because telecom infrastructure is designed for high-speed, low-loss transmission over long distances, it is well suited to carrying quantum information between nodes, a key requirement for quantum communication networks. This helps bridge the gap between current classical networks and future quantum networks, arguably making photonic quantum computing one of the most integration-ready quantum technologies in development today.

Speed-of-Light Operations

Photonic quantum computing quite literally operates at the speed of light. Photons travel through optical circuits and fibers with low loss, enabling fast data transmission and gate operations. In distributed quantum networks, where processors must exchange quantum states in real time, photons offer latency limited only by the speed of light in the medium. This matters for time-sensitive applications such as quantum key distribution, where practical key rates depend on how quickly photons can be generated, sent, and detected.

On top of that, fast qubit transmission could support responsive cloud-based quantum computing, although for remote users the round-trip delay is generally dominated by classical networking rather than by photon travel inside the processor. The ultimate processing speed also depends on the efficiency of quantum gates and error correction, but photons move quantum information at the physical limit for signal propagation, which is why even matter-based platforms typically convert their qubits into photons to link distant processors.
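Even at light speed, latency over fiber is not zero. Light in standard single-mode fiber travels at roughly c/n, where the group index n is about 1.47 near 1550 nm (the exact value used below is an assumption and varies by fiber), which works out to close to 5 microseconds per kilometer. The helper is a back-of-the-envelope sketch that ignores switching and processing delays, not a model of any specific network.

```python
C_LIGHT = 299_792_458.0  # speed of light in vacuum, m/s

def fiber_delay_s(distance_km, group_index=1.468):
    """One-way propagation delay through optical fiber, ignoring switching and processing."""
    return distance_km * 1_000 * group_index / C_LIGHT

for km in (1, 100, 1_000):
    print(f"{km:>5} km: {fiber_delay_s(km) * 1e6:8.1f} us one way")
```

A 1,000 km link therefore adds roughly 5 ms each way: negligible inside a chip or data center, but noticeable for real-time coordination across a continent.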

Challenges in Photonic Quantum Computing

Despite being a promising technology, photonic quantum systems face fundamental challenges that must be addressed before large-scale systems become practical. These challenges include:

Difficulty Producing Deterministic Single Photons

One of the biggest technical hurdles in photonic quantum computing is generating single photons deterministically, on demand. Widely used methods such as spontaneous parametric down-conversion produce photon pairs probabilistically: detecting one photon of a pair heralds the presence of its partner, but many attempts produce no pair at all, so not every attempt yields a usable qubit. This forces engineers to build complex multiplexing systems or accept lower efficiency, ultimately limiting computational speed and scalability.

Deterministic sources, which emit exactly one photon at the desired time, are under active research, including quantum dots and trapped-atom emitters. However, these technologies are not yet mature enough for large-scale commercial deployment. Without a reliable way to produce single photons consistently, computations either succeed less often or require substantial redundancy, both of which reduce efficiency.
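The cost of probabilistic generation can be estimated with simple probability. Suppose each heralded source fires in a given time slot with probability p (kept small in practice, since raising the pump power also raises the chance of unwanted multi-pair emission). Needing n photons at once from independent sources then succeeds with probability pⁿ, while multiplexing N independent attempts per photon raises the per-photon success to 1 − (1 − p)^N. The values of p and N below are illustrative assumptions, and the sketch ignores the switching loss a real multiplexer adds.

```python
def coincidence_probability(p, n_photons):
    """Chance that n independent probabilistic sources all fire in the same slot."""
    return p ** n_photons

def multiplexed_success(p, n_attempts):
    """Chance that at least one of n_attempts independent attempts yields a photon."""
    return 1 - (1 - p) ** n_attempts

p = 0.1  # assumed per-slot heralding probability
print(f"6 photons, no multiplexing: {coincidence_probability(p, 6):.1e}")

p_mux = multiplexed_success(p, 20)  # assumed 20 multiplexed attempts per photon
print(f"per-photon success with 20-fold multiplexing: {p_mux:.3f}")
print(f"6 photons with multiplexing: {coincidence_probability(p_mux, 6):.3f}")
```

With these numbers, six simultaneous photons arrive in only about one slot in a million without multiplexing, versus roughly 46% with 20-fold multiplexing per photon, which is why multiplexing is worth its complexity.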

Losses in Optical Components

Photon loss is a persistent issue in photonic quantum systems. Every mirror, beam splitter, and waveguide introduces some attenuation, and because these losses multiply across successive components, they can quickly become significant. In a quantum computer, losing even a single photon can mean losing the quantum information it carries, which causes computation errors. While state-of-the-art integrated photonic circuits have reduced these losses, the challenge grows when scaling up to larger systems.

Fiber-optic transmission over long distances, though efficient, still attenuates the signal exponentially with distance. This limits the reach of quantum communication without quantum repeaters or error correction. Minimizing losses will require ultra-low-loss materials, improved fabrication techniques, and better coupling between optical components.
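Because losses multiply, they are usually tallied in decibels, where they simply add: a transmission T corresponds to a loss of −10·log₁₀(T) dB. The sketch combines an assumed per-component insertion loss with fiber attenuation of about 0.2 dB/km, a typical figure for standard telecom fiber at 1550 nm, and shows how the probability that all n photons survive falls as Tⁿ. The circuit and loss values are hypothetical, not measurements of any particular device.

```python
def db_to_transmission(loss_db):
    """Convert a loss in dB to the fraction of photons that survive."""
    return 10 ** (-loss_db / 10)

def total_loss_db(component_losses_db, fiber_km=0.0, fiber_db_per_km=0.2):
    """Losses in series add in dB: components plus fiber attenuation."""
    return sum(component_losses_db) + fiber_km * fiber_db_per_km

# Hypothetical circuit: 10 components at 0.1 dB each, no fiber.
chip_db = total_loss_db([0.1] * 10)
t_chip = db_to_transmission(chip_db)
print(f"chip: {chip_db:.1f} dB, T = {t_chip:.3f}, all 6 photons survive: {t_chip ** 6:.3f}")

# Fiber alone: loss grows linearly in dB, so transmission falls exponentially.
for km in (50, 100, 500):
    t = db_to_transmission(total_loss_db([], fiber_km=km))
    print(f"{km:>3} km of fiber: T = {t:.1e}")
```

With these assumed numbers, 100 km of fiber passes only about 1% of photons and 500 km about one in ten billion, which is why long-distance quantum links need repeaters rather than longer cables.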

Need for High-Efficiency Detectors

Accurately reading out quantum information in a photonic system depends on the performance of single-photon detectors. These detectors must be efficient enough to register nearly every incoming photon and fast enough to handle high-throughput operations. Because a multiphoton measurement succeeds only if every photon is detected, even a small drop in per-detector efficiency compounds and can noticeably reduce the overall success rate of quantum computations.

Current superconducting nanowire single-photon detectors (SNSPDs) offer some of the best performance, but they require cryogenic cooling. Efforts are in progress to develop room-temperature alternatives with comparable efficiency, but the technology is not yet mature. High-efficiency detectors also need to have low dark counts (false positives) and precise timing resolution to maintain data integrity.
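Two of these detector figures translate into quick estimates. If all n photons must be registered, an n-fold coincidence is detected with probability ηⁿ for per-detector efficiency η. A detector with dark-count rate R produces at least one false click within a coincidence window τ with probability 1 − e^(−Rτ) under a Poisson model, which is approximately R·τ when small. The parameter values below are assumptions chosen for illustration.

```python
import math

def coincidence_efficiency(eta, n_photons):
    """Probability that all n photons are detected, assuming independent detectors."""
    return eta ** n_photons

def dark_count_probability(rate_hz, window_s):
    """Probability of at least one dark count in the window (Poisson model)."""
    return -math.expm1(-rate_hz * window_s)  # 1 - exp(-R*tau), about R*tau when small

for eta in (0.99, 0.95, 0.80):
    print(f"eta = {eta:.2f}: 6-photon detection = {coincidence_efficiency(eta, 6):.3f}")

# Assumed dark-count rate of 100 Hz and a 1 ns coincidence window.
print(f"false click per window: {dark_count_probability(100, 1e-9):.1e}")
```

At an assumed η = 0.95, a six-photon event is caught only about 73.5% of the time, illustrating how a few percent of per-detector inefficiency compounds with photon number.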

Error Correction in Photonic Systems

While photons are less prone to certain types of decoherence than matter-based qubits, they still suffer from loss and imperfect gate operations. Traditional quantum error correction codes often require large numbers of physical qubits to protect a single logical qubit, which is difficult when photon sources and detectors are not perfectly efficient. Photonic-specific error correction methods, such as bosonic codes and multiplexed entanglement schemes, show promise but are still in early experimental stages.

Implementing error correction in real time also requires extremely precise synchronization and control over optical pathways. Without effective and scalable error correction, photonic quantum computers will struggle to achieve the fault tolerance needed for commercially relevant computations.
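To give a sense of that overhead, the sketch uses a widely quoted rule of thumb for the surface code, a common general-purpose error-correcting code rather than a photonic-specific one: the logical error rate per round is roughly p_L ≈ A·(p/p_th)^((d+1)/2) for physical error rate p and code distance d, and a rotated surface-code patch uses 2d² − 1 physical qubits. The prefactor A = 0.1, the threshold p_th = 1%, and the target error rate are assumptions, and photonic architectures count resources differently, so treat the output as an order-of-magnitude illustration only.

```python
def logical_error_rate(p, d, p_th=0.01, prefactor=0.1):
    """Rule-of-thumb surface-code logical error rate per round (assumed constants)."""
    return prefactor * (p / p_th) ** ((d + 1) / 2)

def required_distance(p, target, p_th=0.01, prefactor=0.1):
    """Smallest odd code distance whose estimated logical error rate is below target."""
    if p >= p_th:
        raise ValueError("physical error rate must be below threshold")
    d = 3
    while logical_error_rate(p, d, p_th, prefactor) >= target:
        d += 2
    return d

def physical_qubits(d):
    """Data plus measurement qubits in a rotated surface-code patch of distance d."""
    return 2 * d * d - 1

for p in (2e-3, 5e-4):
    d = required_distance(p, target=1e-12)
    print(f"p = {p:.0e}: distance {d}, about {physical_qubits(d)} physical qubits per logical qubit")
```

Under these assumptions, a fourfold reduction in physical error rate shrinks the overhead from nearly two thousand physical qubits per logical qubit to a few hundred, which is why loss and gate fidelity dominate photonic roadmaps.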

Applications of Photonic Quantum Technology

Photonic quantum technology has potential applications across various industries. Its ability to transmit and process quantum information quickly and with low decoherence makes it well suited to fields that require speed, security, and scalability.

Secure Quantum Communication

Photonic quantum computing is naturally suited to secure quantum communication, especially through Quantum Key Distribution (QKD). Because photons can travel long distances through optical fibers with little decoherence, they are ideal carriers of quantum information. QKD protocols such as BB84 use the quantum properties of photons to detect eavesdropping: if a third party intercepts and measures the photons, the measurement disturbs their quantum states and introduces errors that the sender and receiver can detect by publicly comparing a sample of their key. This grounds security in the laws of physics rather than solely in the difficulty of mathematical problems, although practical implementations must still guard against imperfections in real hardware. Since photonic systems integrate readily with existing telecom infrastructure, deploying quantum-secure communication networks becomes more feasible and cost-effective.
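The eavesdropping check in BB84 can be simulated classically. In the sketch, Alice encodes random bits in randomly chosen bases (rectilinear or diagonal), Bob measures in random bases, and they keep only the positions where their bases match (sifting). An optional intercept-resend eavesdropper measures each photon in a random basis and resends what she saw; because a wrong-basis measurement randomizes the outcome, she introduces an error rate of about 25% in the sifted key. This is an idealized model with no channel noise, no loss, and perfect single-photon sources.

```python
import random

def measure(bit, prep_basis, meas_basis, rng):
    """Ideal single-photon measurement: same basis returns the bit, otherwise a coin flip."""
    return bit if prep_basis == meas_basis else rng.randint(0, 1)

def bb84_qber(n_photons, eavesdrop, seed=0):
    """Return (sifted key length, quantum bit error rate) for an idealized BB84 run."""
    rng = random.Random(seed)
    sifted, errors = 0, 0
    for _ in range(n_photons):
        bit, alice_basis = rng.randint(0, 1), rng.randint(0, 1)  # basis 0 = rectilinear, 1 = diagonal
        sent_bit, sent_basis = bit, alice_basis
        if eavesdrop:
            eve_basis = rng.randint(0, 1)
            sent_bit = measure(bit, alice_basis, eve_basis, rng)
            sent_basis = eve_basis  # Eve resends the photon in the basis she measured
        bob_basis = rng.randint(0, 1)
        bob_bit = measure(sent_bit, sent_basis, bob_basis, rng)
        if bob_basis == alice_basis:  # sifting: keep only matching-basis positions
            sifted += 1
            errors += bob_bit != bit
    return sifted, errors / sifted

for eve in (False, True):
    n_kept, qber = bb84_qber(20_000, eavesdrop=eve)
    print(f"eavesdropper={eve}: kept {n_kept} bits, error rate {qber:.3f}")
```

Roughly half the photons survive sifting in both runs, but only the intercepted run shows errors, which is the signal Alice and Bob look for when they compare a sample of their key.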

Photonic quantum communication has the potential to eventually set the foundation for a global quantum internet. This would allow for secure connections between quantum computers, research facilities, financial institutions, and governments across continents.

High-Speed Optimization Problems

Many industries face complex optimization problems that are computationally expensive for classical computers. Photonic quantum computing may address some of these challenges more efficiently by pairing quantum algorithms with the high speed of photon-based processing. Rather than simply trying every solution at once, quantum optimization algorithms use superposition and interference to raise the probability of measuring good solutions, which could shorten the time needed to find high-quality answers.

Algorithms designed for combinatorial optimization, such as the Quantum Approximate Optimization Algorithm (QAOA), could benefit from the low latency and rapid gate operations of photonic hardware, although whether QAOA will outperform classical methods on practical problems remains an open question. This could lead to breakthroughs in areas like real-time traffic routing, supply chain management, portfolio optimization, and energy grid balancing.
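To show what QAOA actually does, here is a hardware-agnostic, classical state-vector sketch of depth-1 QAOA for MaxCut on a 4-node ring; the graph and the coarse angle grid are illustrative choices. It prepares the uniform superposition, applies the cost phase e^(−iγC) and the mixer e^(−iβΣX), and computes the expected cut size. On a real device, photonic or otherwise, that expectation would be estimated from repeated measurements, and a classical optimizer would tune γ and β.

```python
import cmath
import math

EDGES = [(0, 1), (1, 2), (2, 3), (3, 0)]  # 4-node ring; the maximum cut is 4
N = 4

def cut_size(z):
    """Number of edges cut by bitstring z (bit i gives the side of node i)."""
    return sum(((z >> i) & 1) != ((z >> j) & 1) for i, j in EDGES)

def qaoa_expectation(gamma, beta):
    """Expected cut size after one QAOA layer, starting from |+>^N."""
    dim = 2 ** N
    state = [1 / math.sqrt(dim)] * dim
    # Cost layer: diagonal phase exp(-i * gamma * C(z)).
    state = [a * cmath.exp(-1j * gamma * cut_size(z)) for z, a in enumerate(state)]
    # Mixer layer: exp(-i * beta * X) = cos(beta) I - i sin(beta) X on each qubit.
    c, s = math.cos(beta), math.sin(beta)
    for q in range(N):
        bit = 1 << q
        new = state[:]
        for z in range(dim):
            new[z] = c * state[z] - 1j * s * state[z ^ bit]
        state = new
    return sum(abs(a) ** 2 * cut_size(z) for z, a in enumerate(state))

# Coarse grid search over the two angles.
steps = 40
best = max(
    (qaoa_expectation(math.pi * i / steps, (math.pi / 2) * j / steps), i, j)
    for i in range(steps)
    for j in range(steps)
)
print(f"best expected cut = {best[0]:.3f} (maximum possible is 4)")
```

With both angles at zero the circuit just samples random cuts (expected size 2); the tuned single layer raises this to 3, and deeper circuits can push it further toward the optimum.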

Chemistry and Materials Science

Simulating molecular interactions is one of the most promising applications of quantum computing, and photonic systems could contribute significantly in this domain. Because quantum processors represent and manipulate quantum states directly, they could simulate molecular bonds, energy transfers, and reaction pathways more accurately than classical approximations allow. This could accelerate the discovery of new drugs, catalysts, and high-performance materials by letting scientists test hypotheses virtually before moving to physical experiments.

The low decoherence of photons could also help complex simulations run without considerable loss of accuracy, provided photon loss is kept under control. In the long term, photonic quantum computing could become valuable in pharmaceuticals, renewable energy technologies, and advanced manufacturing materials.

Advancements in Photonic Quantum Systems

Recent breakthroughs in photonic quantum technology are pushing the field from research toward real-world applications, with a number of photonic quantum computing companies actively involved. In June 2025, researchers unveiled a CMOS-compatible “quantum light factory” on a 1 mm² silicon chip, complete with microring resonators that generate photon pairs alongside built-in stabilization and control systems. This scalable, semiconductor-friendly design could pave the way to a manufacturable platform for photonic quantum computing.

Meanwhile, single-photon sources and on-chip interferometers are being combined into reconfigurable platforms for programmable, cloud-accessible photonic computing, including multiphoton boson sampling. For example, Ascella, a six-photon processor built by photonic quantum computing company Quandela with on-demand quantum dot sources, demonstrated state-of-the-art performance and stability through the Quandela Cloud platform.
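Boson sampling rests on one formula: for photons sent through a linear-optical network with unitary U, the probability of a given output pattern is |Perm(U_sub)|² divided by the product of the factorials of the input and output occupation numbers, where U_sub repeats rows and columns according to those occupations. The sketch computes the permanent by brute force, which is fine for a handful of photons but hopeless at scale (the hardness that motivates the experiments), and checks it on two textbook cases: Hong–Ou–Mandel bunching at a 50:50 beam splitter and three photons in a three-mode Fourier interferometer. The convention u[out][in] is an assumption of this sketch.

```python
import cmath
import itertools
import math

def permanent(m):
    """Brute-force permanent: like a determinant, but every term is added with a + sign."""
    n = len(m)
    total = 0
    for sigma in itertools.permutations(range(n)):
        term = 1
        for i in range(n):
            term *= m[i][sigma[i]]
        total += term
    return total

def output_probability(u, inputs, outputs):
    """Probability of an output occupation pattern; u[out][in] is the network unitary."""
    rows = [i for i, t in enumerate(outputs) for _ in range(t)]
    cols = [j for j, s in enumerate(inputs) for _ in range(s)]
    sub = [[u[r][c] for c in cols] for r in rows]
    norm = math.prod(math.factorial(k) for k in (*inputs, *outputs))
    return abs(permanent(sub)) ** 2 / norm

# 50:50 beam splitter: two identical photons never leave in different ports (Hong-Ou-Mandel).
bs = [[1 / math.sqrt(2), 1 / math.sqrt(2)], [1 / math.sqrt(2), -1 / math.sqrt(2)]]
for out in [(2, 0), (1, 1), (0, 2)]:
    print(out, round(output_probability(bs, (1, 1), out), 3))

# Three-mode Fourier interferometer ("tritter") with one photon per input mode.
w = cmath.exp(2j * math.pi / 3)
tritter = [[w ** (j * k) / math.sqrt(3) for k in range(3)] for j in range(3)]
print("P(1,1,1 -> 1,1,1) =", round(output_probability(tritter, (1, 1, 1), (1, 1, 1)), 3))
```

The beam-splitter case gives 0.5, 0, 0.5 and the tritter case gives 1/3; in a real boson-sampling device the network is large and random, and sampling from these probabilities is what the photons do natively.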

Major industry players are also making progress:

- PsiQuantum, in collaboration with GlobalFoundries, has demonstrated high-fidelity chip-to-chip photonic qubit interconnects and introduced its “Omega” chipset with wafer-scale fabrication, pushing toward million-qubit-scale systems.

- Xanadu is working on continuous-variable photonics and boson sampling to deliver cloud-based access through its Borealis system and strengthen its developer ecosystem with open-source tools like PennyLane.

- Aegiq has engineered deterministic single-photon sources using quantum dots, advancing modular, scalable architectures.

How BlueQubit Is Advancing Photonic Quantum Technology

As a quantum computing platform, BlueQubit supports research in photonic quantum computing by providing a development environment that can interface with different quantum technologies, including photonic systems. Its software stack makes it easy for researchers to design quantum circuits, run simulations, and test algorithms that may later be executed on photonic devices. This includes compatibility with standard quantum programming frameworks, allowing integration into existing research workflows.

By allowing for the simulation and modeling of photonic circuits, the platform helps researchers explore approaches to address current technical challenges, such as loss and scaling constraints. These capabilities allow the research community to experiment with photonic quantum computing concepts in a controlled environment before moving on to real-life applications.

To Sum Up

Photonic quantum computing is moving from specialized laboratory experiments to a viable pathway for scalable quantum systems. By using mature optical technologies and addressing challenges such as photon loss, source reliability, and detector efficiency, researchers are working toward architectures that can integrate with existing communication infrastructure. As integrated photonics and error mitigation continue to advance, high-speed quantum computation becomes more practical by the day.

Frequently Asked Questions

How does photonic quantum computing work?

Photonic quantum computing uses particles of light, called photons, as qubits to encode and process quantum information. These photons can carry information in properties such as polarization, phase, or time-bin, and are manipulated using optical components like beam splitters, waveguides, and phase shifters. Because photons barely interact with one another directly, quantum logic gates are typically built from interference between photons combined with measurement, or from nonlinear optical effects, often using integrated photonic circuits on semiconductor chips.

Is photonic computing possible?

Yes. Photonic computing is not only possible but already being demonstrated in laboratories and through cloud-accessible platforms. While fully fault-tolerant photonic quantum computers are still in development, companies like Xanadu and Quandela have built functioning photonic processors that can run quantum algorithms. These systems benefit from advances in integrated photonics, deterministic single-photon sources, and high-efficiency detectors.

What is the first photonic quantum computer?

The title of “first photonic quantum computer” depends on the definition. In 2020, Xanadu launched Xanadu Quantum Cloud, giving public access to its early photonic chips; in 2022 it opened access to Borealis, a photonic quantum processor that performed Gaussian boson sampling with a record number of photons. Meanwhile, in Europe, Quandela introduced MosaiQ to OVHcloud in 2024, which was the first commercial deployment of a photonic quantum computer on the continent.